what to do with a language model in python

Implementing a character-level trigram language model from scratch in python

Predicting is difficult, but it tin be solved in small bits, like predicting the side by side few words someone is going to say or the next characters to consummate a give-and-take or judgement being typed. That'south what we are going to attempt to do.

The complete lawmaking for this article can be found Here

What is an N-gram

An Northward-gram is a sequence of n items(words in this case) from a given sample of text or speech. For example, given the text " Susan is a kind soul, she will help yous out equally long as it is inside her boundaries" a sample n-gram(s) from the text above starting from the beginning is :

unigram: ['susan', 'is', 'a, 'kind', 'soul', 'she', 'volition', 'help'………….]

bigram: ['susan is', 'is a', 'a kind', 'kind soul', ' soul she', 'she will', 'will assist', ' assist y'all'………….]

trigram: ['susan is a', 'is a kind', 'a kind soul', 'kind soul she', 'soul she will', 'she will help you'………….]

From the examples above, nosotros can come across that n in north-grams can be different values, a sequence of 1 gram is called a unigram, ii grams is chosen a bigram, sequence of iii grams is called a trigram.

Trigram models

We volition be talking about trigram models in this article.

A bigram model approximates the probability of a give-and-take given all the previous words by using only the conditional probability of the preceding words while a trigram model looks two words into the past.

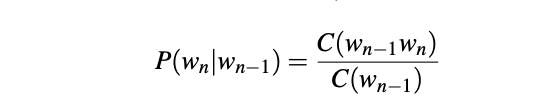

So based on the above, to compute a particular trigram probability of a word y given a previous words 10, z, nosotros'll compute the count of the trigram C(xzy) and normalize past the sum of all the trigrams that share the aforementioned words x and z, this can be simplified using the equation below:

That is, to compute a item trigram probability of the word "soul", given the previous words "kind", "hearted", we'll compute the count of the trigram C("kind hearted soul") and normalize by the sum of all the trigrams that share the same start-words "kind hearted".

We always represent and compute language model probabilities in log format equally log probabilities. Since probabilities are (past definition) less than or equal to 1, the more probabilities we multiply together, the smaller the product becomes. Multiplying enough n-grams together would result in numerical underflow, so we use the log probabilities, instead of the raw probabilities. Calculation in log space is equivalent to multiplying in linear space, and then we combine log probabilities past adding them.

Code

Nosotros will be using a corpus of data from the Gutenberg projection. This contains passages from different books. Nosotros will be predicting character graphic symbol-level trigram language model, for instance, Consider this sentence from Austen:

Emma Woodhouse, handsome, clever, and rich, with a comfortable home and happy disposition, seemed to unite some of the best blessings of existence; and had lived nearly twenty-ane years in the world with very picayune to distress or vex her.

The following are some examples of character-level trigrams in this judgement:

Emm, mma, Woo, ood, …

First, we will do a niggling preprocessing of our data, we will combine the words in all the passages equally 1 large corpus, remove numeric values if any, and double spaces.

def preprocess(self):

output = ""

for file in self.texts:

with open(os.path.join(os.getcwd(), file), 'r', encoding="utf-8-sig", errors='ignore') as suffix:

sentence = suffix.read().split up('\n')

for line in sentence:

output += " " + line

return output Adjacent is the code for generating our north-grams, we will write a general office that accepts our corpus and the value describing how nosotros would like to carve up our n-grams. See beneath:

Next, we build a role that calculates the give-and-take frequency, when the discussion is not seen we will for this case smooth by replacing words with less than 5 frequency with a general character, in this example, UNK.

def UNK_treated_ngram_frequency(self, ngram_list):

frequency = {}

for ngram in ngram_list:

if ngram in frequency:

frequency[ngram] += ane

else:

frequency[ngram] = 1 sup = 0

result = {}

for chiliad, v in frequency.items():

if v >= 5:

result[k] = v

else:

sup += v

upshot["UNK"] = sup

return event

Next, we accept our trigram model, nosotros will use Laplace add-one smoothing for unknown probabilities, we will also add together all our probabilities (in log infinite) together:

Evaluating our model

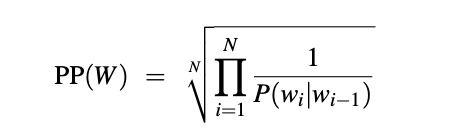

There are 2 different approaches to evaluate and compare language models, Extrinsic evaluation and Intrinsic evaluation. We will exist evaluating intrinsically considering it is a useful way of chop-chop evaluating models. Nosotros will evaluate with a metric called Perplexity, this is an intrinsic evaluation method, not as good as evaluating intrinsically though, the article HERE can explain the evaluation concepts better.

Nosotros will mensurate the quality of our model by its performance on some examination data. The perplexity of a language model on a test set is the inverse probability of the test set, normalized by the number of words. Thus the higher the conditional probability of the discussion sequence, the lower the perplexity, and maximizing the perplexity is equivalent to maximizing the test prepare probability co-ordinate to the language model.

For our instance, we will be using perplexity to compare our model against two test sentences, i English language and another French.

Perplexity is calculated as:

Implemented equally:

def perplexity(total_log_prob, Due north):

perplexity = total_log_prob ** (ane / Due north)

return perplexity Testing both sentences below, nosotros get the following perplexity:

perp = cocky.perplexity(sum_prob, len(trigram_value))

print("perplexity ==> ", perp) English language Judgement: perplexity of 0.12571631288775162

If nosotros practice not change our economic and social policies, we will be in danger of undermining solidarity, the very value on which the European social model is based.

The rapid migrate towards an increasingly divided social club is happening not just in Europe but also on a much wider scale. An entire continent, Africa - about which you made a highly relevant betoken in your oral communication, Prime Minister - has lost contact even with the developing world.

We must do everything in our ability to stop this unjust development model and to give voices and rights to those who take neither.

Ladies and gentlemen, Prime Minister, the Laeken Height and annunciation are also vitally important for another reason.

Laeken must decide how we are to structure the second phase of the 'Time to come of Europe' debate. French Judgement: perplexity of 0.21229602165162492

Je suis reconnaissante à la Committee d' avoir adopté ce programme d' action.

Cependant, la condition de son applicabilité est que les personnes atteintes d' united nations handicap puissent disposer des moyens financiers nécessaires, et qu' elles aient la possibilité purement physique de passer les frontiÚres.

Il serait intéressant de savoir si la Commission est aussi disposée à débloquer des fonds en faveur des personnes handicapées, pour qu' elles puissent, elles aussi, parcourir le monde, aussi loin que pourra les emmener le fauteuil roulant.

J'ai mentionné la directive que la Commission a proposéeast pour l'aménagement des moyens de transport collectifs, afin que les handicapésouthward puissent les utiliser.

Le Conseil n'a pas encore fait avancer cette question, qui en est au stade de la concertation. As expected, our model is quite perplexed by the french sentence, This is expert plenty at the moment, however, our model can be improved on.

Comments and feedbacks are welcome.

Don't forget to click the follow button.

Source: https://towardsdatascience.com/implementing-a-character-level-trigram-language-model-from-scratch-in-python-27ca0e1c3c3f

0 Response to "what to do with a language model in python"

Post a Comment